rTest

The testing tool for FME Workspaces

Olivier Gayte: CEO and founder, Veremes. rTest Designer.

Vincent Aalalou: rTest Product Manager, Veremes.

Published November 29, 2021

1 Introduction

It is a truism that software must be tested before it goes into production, and all the major development languages offer tools or libraries dedicated to defining and automating tests.

In the ETL world, this problem also exists: “How can we be sure that a process produces the right result before putting it into production?”

Various independent tools try to answer this question, and some ETL tools offer their own data quality extension (1).

In fact, these tools are more interested in the quality of the data than in the correct functioning of the workspace: they will detect that an attribute has a null value but not that files are missing in the result. And of course they are unable to check a CAD, image or point cloud result…

What about FME?

This topic is not widely discussed in the FME community. The main exception is Kevin Weller, who has devoted several articles to it (2, 3) and proposed a working method and some transformers on FME Hub.

The subject is important because quality, or rather non-quality, represents a significant cost for users of digital tools (4). In practice, testing often comes down to a visual inspection of the result in FME Data Inspector or in the target database. While this method is acceptable for a one-time execution, it is hard to justify when the workspace must keep working reliably over time.

Faced with this problem, Veremes developed a testing tool dedicated to FME workspaces in 2016, for its own needs, and named it rTest. Although released under an open source license and available on GitHub, the product remained little known because it was undocumented and suffered from many flaws.

Version 2 of rTest, which has just been released, is intended for a wider audience. The product is still free and open source, and it now comes with complete documentation in English and French and technical support from Veremes. The code has been completely rewritten and supplemented with a web application for viewing the reports.

This article describes the principles of rTest and the associated development method.

2 How does rTest work?

rTest is a test environment that automates the execution of FME workspaces and checks whether the observed result is consistent with the expected result. Running a test scenario generates an HTML report that provides information on the execution of the workspaces (status, duration, RAM, log) and on the conformity of the results.

rTest is non-intrusive: it does not require any specific transformers or connectors in the workspace itself. It therefore carries no risk of degrading performance or changing behavior.

rTest consists of three components:

- A definition schema that sets out a syntax, or rather a grammar, for writing test scenarios as XML documents: rtest.xsd

- An FME workspace that executes the test scenarios: scenarioPlayer.fmw

- A web application (HTML+JS) that displays the result of a test run as a dynamic HTML page: reportTemplate.html

The rtest.xsd schema and the web application resources (.js, .css…) are available online and are fetched transparently. For users with Internet access, rTest therefore boils down to two files: scenarioPlayer.fmw and reportTemplate.html.

The test scenarios

A test scenario is an XML document describing a list of tests to be executed sequentially. Each test is composed of one or more FME workspaces, with their parameters, and of the checks that must pass for the test to be considered valid.

Editing an XML document is never fun, but with a good XML editor and the rtest.xsd application schema it is an easy exercise.

scenarioPlayer.fmw is the FME workspace responsible for reading the scenarios and executing the tests one after another: it first runs the FME workspaces with the right parameters, then checks whether the results produced match the expected values.

The reports produced by scenarioPlayer are timestamped and stored in dedicated directories. This makes it easier to keep past results and preserves the links between the HTML reports and the log files, even when they are moved to a storage server.

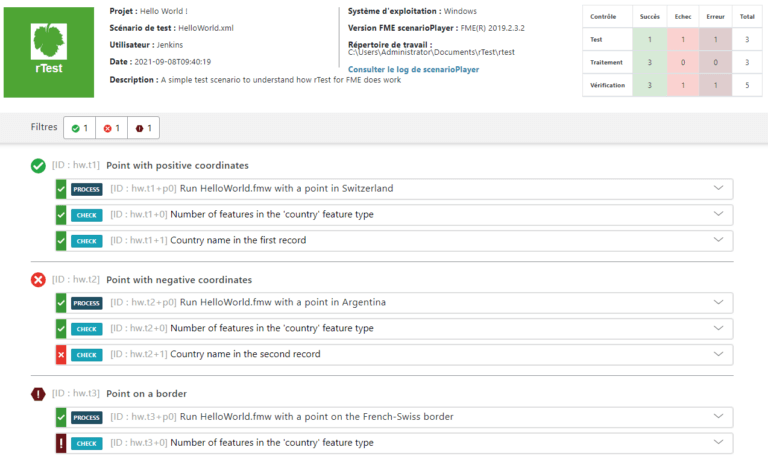
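The structure of a scenario and the player loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `Scenario`, `Test`, `WorkspaceRun`, `play`, `report_dir` and the `run_workspace` callback are hypothetical, and the sketch reflects neither the actual rtest.xsd grammar nor the internals of scenarioPlayer.fmw.

```python
from dataclasses import dataclass, field
from datetime import datetime
from pathlib import Path
from typing import Callable


@dataclass
class WorkspaceRun:
    """One FME workspace to execute, with its parameters (hypothetical model)."""
    path: str
    parameters: dict[str, str] = field(default_factory=dict)


@dataclass
class Test:
    """A test: one or more workspaces plus the checks that validate it."""
    name: str
    workspaces: list[WorkspaceRun]
    checks: list[Callable[[], bool]]  # each check returns True when it passes


@dataclass
class Scenario:
    """A scenario: an ordered list of tests."""
    name: str
    tests: list[Test]


def report_dir(root: Path, scenario: Scenario, now: datetime) -> Path:
    """Timestamped directory, so successive reports never overwrite each other."""
    return root / f"{scenario.name}_{now:%Y%m%d_%H%M%S}"


def play(scenario: Scenario,
         run_workspace: Callable[[WorkspaceRun], None]) -> dict[str, bool]:
    """Run the tests sequentially: execute the workspaces, then apply the checks."""
    results: dict[str, bool] = {}
    for test in scenario.tests:
        for workspace in test.workspaces:
            # Assumption: the caller supplies the function that actually
            # launches FME; this sketch does not invoke FME itself.
            run_workspace(workspace)
        # The checks alone decide the verdict, so a test may legitimately
        # expect a workspace to fail.
        results[test.name] = all(check() for check in test.checks)
    return results
```

Passing the launcher as a callback keeps the sketch independent of how FME is invoked, and lets the loop be exercised with a stub.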

The HTML report

Running scenarioPlayer.fmw produces an HTML report that presents all the information related to a test run: status of the tests, execution time, log content…

The report is a dynamic HTML application that lets you filter the failed tests directly and see the status of each check.

3 What is a compliant FME workspace?

Two main families of tests are usually distinguished when determining whether software is compliant: functional tests, whose objective is to verify that the workspace produces the expected results, and non-functional tests, which focus on performance, scalability, security…

With rTest we are clearly in the category of functional tests and we want to check whether the data produced by the execution of an FME workspace conforms to expectations in all circumstances.

In fact, it is almost mission impossible: a single failing test is enough to prove that a workspace does not work, whereas you would have to check an infinite number of cases to prove that it works every time. We are therefore reduced to validating "probable correct operation" with a certain level of uncertainty.

In practice, four levels of verification can be distinguished. These are not exclusive, as a test scenario can combine different checks.

- The status of the workspace

We just check if the workspace ends with the status “SUCCESSFUL” or “FAILED”.

- Enumeration of features

We count the features produced and compare the result to the expected number.

The count can be global or by feature type.

- The value of the features

This is the most detailed level of verification. It must ensure that the features produced match expectations. The check can cover one or more attributes as well as the geometry, and it can apply to a sample of features or to all of them.

- Management of non-conformities

How should the workspace behave when it has to perform an impossible operation?

For example, when a parameter is inconsistent, when the process does not have write permission on a file, or when it simply has to calculate the square root of -1.

It is the responsibility of the developer to identify these error cases and to define the behavior to adopt: terminating with an error, writing to a log file, deleting intermediate results beforehand…

This behavior must be verifiable during the compliance test.
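The four levels above can be illustrated with a short Python sketch. It is not how rTest implements its checks: features are represented here as plain dictionaries with a `feature_type` key, and the function names (`check_status`, `check_counts`, `check_values`) are hypothetical, chosen for this example only.

```python
from collections import Counter


def check_status(observed: str, expected: str = "SUCCESSFUL") -> bool:
    """Level 1: the workspace ended with the expected status.

    Passing expected="FAILED" covers level 4, where a workspace is
    required to fail on an impossible operation.
    """
    return observed == expected


def check_counts(features, expected_total=None, expected_by_type=None) -> bool:
    """Level 2: compare feature counts, globally and/or per feature type."""
    if expected_total is not None and len(features) != expected_total:
        return False
    if expected_by_type is not None:
        observed = Counter(f["feature_type"] for f in features)
        if dict(observed) != dict(expected_by_type):
            return False
    return True


def check_values(features, expected, key, attributes) -> list[str]:
    """Level 3: compare selected attributes of features matched on `key`.

    Returns a list of mismatch descriptions; an empty list means the
    produced features match the expected ones.
    """
    produced = {f[key]: f for f in features}
    problems = []
    for exp in expected:
        got = produced.get(exp[key])
        if got is None:
            problems.append(f"missing feature {exp[key]}")
            continue
        for attr in attributes:
            if got.get(attr) != exp.get(attr):
                problems.append(
                    f"{exp[key]}.{attr}: expected {exp.get(attr)!r}, "
                    f"got {got.get(attr)!r}"
                )
    return problems
```

Because `expected` may list only a few features, the same function covers both a spot check and an exhaustive comparison. Geometry comparison is deliberately left out of this sketch.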

4 The method behind the tool

In favor of test-assisted developments

To get the most out of it, the test tool should not be just another tool for the developers: it should be at the heart of the development project.

In a traditional project, with manual testing, verification takes considerable time and represents a significant cost.

During the life of the project, the development team does not usually have the capacity to perform tests that are both thorough and regularly executed. It must sacrifice completeness or frequency and often both.

In the end, it is often up to the user to validate the deliverable before it goes into production by trying to identify cases of non-compliance.

With a test tool, the right method is to identify the test cases very early in the project and to involve the users in this work. This makes it possible to produce the test data sets before or during development and to write the test scenarios as soon as the first versions of the workspace exist.

The developer can then rely on these scenarios to check correct operation continuously and devote time to tasks that are sometimes neglected, such as performance optimization, code cleanup and industrialization, without fear of introducing regressions.

Test-assisted development thus reverses the burden of proof at acceptance time: it is no longer up to the user to prove that the workspace does not work, but up to the developer to prove that it does.

To do this, the developer can rely on the test cases identified at the beginning of the project, which serve as proof.

Benefits but for which projects?

It is true that using a test tool increases the workload compared to cursory manual tests. But as soon as the project becomes complex or critical, this additional cost is quickly offset by a lower maintenance workload and by the other benefits of this practice:

- Better quality of the deliverable

- Reduced time to production

- Reduced risk of regression in case of functional evolution

- Less non-compliant data in production, and lower associated costs

- Active and early participation of customers/users in the definition of test cases

- Better performance

- Reduced correction time in the maintenance phase

- Easier maintenance by another team of developers

- Quick qualification of different platforms (Windows, Linux…) and FME versions

- Possibility of working in pairs (FME developer and test scenario designer)

In the end, we recommend rTest for all projects that must meet robustness and quality requirements, especially if they are intended to be maintained for several years. More than size and complexity, it is the criticality and the cost of failure that must be evaluated.

Everyone will readily appreciate that an error in aeronautical, road or military data can have serious consequences. More generally, no field can use erroneous data without suffering some damage.

rTest is therefore intended to be widely used by the FME community in all fields of activity. Veremes continues to maintain the product for its own needs but we will be happy to receive feedback and expectations from developers, distributors and integrators engaged in projects to improve the quality of their production.